Implementing a Neural Network in Python

Introduction

This past summer, I enrolled in CS188 at UC Berkeley, an introductory course in Artificial Intelligence. Additionally, for my third semester project at Epita, I worked with neural networks. These experiences inspired me to write a blog post on the topic.

What is the XOR problem?

The XOR problem has two inputs, each of which is either True or False. We want to return True if the two inputs are different, and False if they are the same.

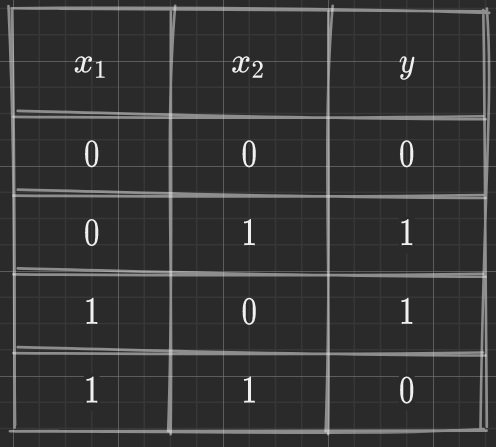

Here is the truth table for the XOR problem:
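For reference, the four rows of that table can also be generated in a few lines of Python, since XOR on two booleans is the same as checking that they differ. The `xor` helper name is only for illustration.

```python
def xor(a, b):
    # True exactly when the two boolean inputs differ
    return a != b

# Enumerate every combination of the two inputs
for a in (False, True):
    for b in (False, True):
        print(a, b, "->", xor(a, b))
```

Only the two middle rows, where the inputs differ, come out as True.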

What is a Neural Network?

A neural network is a machine learning model that is inspired by the human brain. It is made up of layers of neurons, which are connected to each other by synapses. Each neuron takes in a set of inputs, multiplies each input by its own weight, sums the results, and then passes that sum through an activation function. The output of the activation function is the output of the neuron. The weights are adjusted during training to minimize the error between the output of the neural network and the expected output.
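As a rough sketch of what a single neuron computes, here is one in plain Python. The function name `neuron_output`, the `bias` term, and the choice of sigmoid as the activation are my assumptions for this example; the description above only mentions inputs, weights, and an activation function.

```python
from math import exp

def sigmoid(x):
    return 1 / (1 + exp(-x))

def neuron_output(inputs, weights, bias=0.0):
    # Weighted sum of the inputs (the bias is an assumed extra term)
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation function turns the sum into the neuron's output
    return sigmoid(weighted_sum)

# 1.0 * 0.5 + 0.0 * (-0.5) = 0.5, then sigmoid(0.5)
print(neuron_output([1.0, 0.0], [0.5, -0.5]))
```

Training never changes this computation. It only changes the numbers stored in `weights` and `bias`.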

An XOR Neural Network

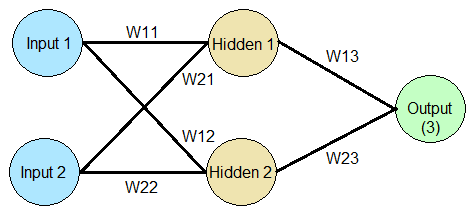

Note that the XOR problem is not linearly separable, so we cannot solve it with a single-layer perceptron. We need a neural network with at least one hidden layer.
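To make the "not linearly separable" claim concrete, the sketch below brute-forces a small grid of weights for a single threshold neuron. It is an illustration, not a proof: the grid range, the `find_perceptron` name, and the use of a hard threshold instead of a smooth activation are all assumptions of this example.

```python
from itertools import product

CASES = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_TARGETS = [0, 0, 0, 1]
XOR_TARGETS = [0, 1, 1, 0]

def find_perceptron(targets, grid):
    """Return the first (w1, w2, b) on the grid that reproduces targets, or None."""
    for w1, w2, b in product(grid, repeat=3):
        # A single neuron with a hard threshold at zero
        outputs = [1 if w1 * x1 + w2 * x2 + b > 0 else 0 for x1, x2 in CASES]
        if outputs == targets:
            return (w1, w2, b)
    return None

GRID = [i / 2 for i in range(-8, 9)]  # -4.0, -3.5, ..., 4.0

print("AND:", find_perceptron(AND_TARGETS, GRID))
print("XOR:", find_perceptron(XOR_TARGETS, GRID))  # prints "XOR: None"
```

The search finds weights for AND but none for XOR, and a finer grid would not help. XOR requires `b <= 0`, `w1 + b > 0`, `w2 + b > 0`, and `w1 + w2 + b <= 0`. Adding the two middle inequalities gives `w1 + w2 + b > -b >= 0`, which contradicts the last one.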

Here, the Hidden 1 neuron takes Input1 * W11 + Input2 * W21 as its input. We then pass this sum through the activation function, which gives us the value of the Hidden 1 neuron.

The same principle applies to Hidden 2, but with Input1 * W12 + Input2 * W22.

Once the values for Hidden 1 and Hidden 2 are calculated, we can calculate the value for Output using the same principle.
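The steps above can be sketched as a small forward pass. The weights here are hand-picked so that the network computes XOR; they are not learned, and they are not taken from my repo. The bias terms `B1`, `B2`, `B_OUT` and the output weight names `V1`, `V2` are assumptions added for this example, since the formulas above only name W11, W21, W12, and W22.

```python
from math import exp

def sigmoid(x):
    return 1 / (1 + exp(-x))

# Hand-picked parameters; Wij is the weight from input i to hidden neuron j.
W11, W21, B1 = 20.0, 20.0, -10.0    # Hidden 1 behaves like OR
W12, W22, B2 = -20.0, -20.0, 30.0   # Hidden 2 behaves like NAND
V1, V2, B_OUT = 20.0, 20.0, -30.0   # Output behaves like AND of the hidden values

def forward(input1, input2):
    hidden1 = sigmoid(input1 * W11 + input2 * W21 + B1)
    hidden2 = sigmoid(input1 * W12 + input2 * W22 + B2)
    # The output neuron applies the same principle to the hidden values
    return sigmoid(hidden1 * V1 + hidden2 * V2 + B_OUT)

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(forward(a, b)))
```

Rounding the output gives 0, 1, 1, 0 for the four input pairs, which matches XOR. In practice the weights are found by training instead of being chosen by hand.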

The Activation Function

The activation function takes the weighted sum computed by a neuron and maps it to the neuron's output value. It is used to introduce non-linearity into the neural network; without it, stacking layers would still only produce a linear function of the inputs. There are many different activation functions, but one of the most common is the sigmoid function.

The sigmoid function is defined as:

    from math import exp

    def sigmoid(x):
        # Squash any real number into the range (0, 1).
        return 1 / (1 + exp(-x))

Here is a graph of the sigmoid function:
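To get a feel for the shape of the curve numerically, this short sketch evaluates the function at a few points. The sample points are arbitrary choices for this example.

```python
from math import exp

def sigmoid(x):
    return 1 / (1 + exp(-x))

# Evaluate the curve at a few points on both sides of zero
for x in (-6, -2, 0, 2, 6):
    print(f"sigmoid({x:>2}) = {sigmoid(x):.4f}")
```

The values rise from about 0.0025 at x = -6, through exactly 0.5 at x = 0, to about 0.9975 at x = 6. The curve is S-shaped and symmetric around the point (0, 0.5), so sigmoid(x) + sigmoid(-x) = 1.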

Backpropagation

I won’t get into too much detail about backpropagation, but here is a brief overview. Backpropagation is the process of adjusting the weights of the neural network to minimize the error between the output of the neural network and the expected output. It is done by calculating the gradient of the error function with respect to each weight, and then adjusting each weight by a small amount in the direction opposite to the gradient, since that is the direction in which the error decreases.
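Here is a minimal sketch of that idea for a network with two inputs, two hidden neurons, and one output, written with plain lists. It is not the code from my repo. The squared-error function, the bias terms, the learning rate of 0.5, the seed, and all function names are assumptions made for this example.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# The four XOR cases as ((input1, input2), expected_output).
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def init_params(rng):
    # w_hidden[i][j] is the weight from input i to hidden neuron j
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],
        "b_hidden": [0.0, 0.0],
        "w_out": [rng.uniform(-1, 1) for _ in range(2)],
        "b_out": 0.0,
    }

def forward(p, x):
    hidden = [
        sigmoid(x[0] * p["w_hidden"][0][j] + x[1] * p["w_hidden"][1][j] + p["b_hidden"][j])
        for j in range(2)
    ]
    out = sigmoid(hidden[0] * p["w_out"][0] + hidden[1] * p["w_out"][1] + p["b_out"])
    return hidden, out

def mean_squared_error(p):
    # Reporting metric only: average squared error over the four cases
    return sum((forward(p, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

def train_epoch(p, learning_rate):
    for x, target in DATA:
        hidden, out = forward(p, x)
        # Error signal at the output for E = 0.5 * (out - target)^2;
        # out * (1 - out) is the derivative of the sigmoid.
        delta_out = (out - target) * out * (1 - out)
        # Chain rule back to each hidden neuron, using the output
        # weights as they were before this update.
        delta_hidden = [delta_out * p["w_out"][j] * hidden[j] * (1 - hidden[j]) for j in range(2)]
        # Move every parameter a small step against its gradient.
        for j in range(2):
            p["w_out"][j] -= learning_rate * delta_out * hidden[j]
            p["b_hidden"][j] -= learning_rate * delta_hidden[j]
            for i in range(2):
                p["w_hidden"][i][j] -= learning_rate * delta_hidden[j] * x[i]
        p["b_out"] -= learning_rate * delta_out

params = init_params(random.Random(0))
print("error before training:", mean_squared_error(params))
for _ in range(5000):
    train_epoch(params, learning_rate=0.5)
print("error after training: ", mean_squared_error(params))
```

The error after training should be lower than before. With only two hidden neurons, though, training can stall in a poor local minimum for some starting weights, so how close the outputs get to 0 and 1 depends on the seed and the learning rate.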

The Code

Here is the GitHub repo link for the code, along with a screenshot of the code running. Note that I used matrices to store the weights, neuron values, etc., to make the code more efficient.
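To illustrate the matrix idea without reproducing the repo, here is a small sketch in which each layer is a weight matrix stored as a list of rows. Computing a layer then becomes a matrix-vector product followed by the activation. The names `layer_forward` and `network_forward`, the bias vectors, and the hand-picked example weights are all assumptions of this sketch.

```python
from math import exp

def sigmoid(x):
    return 1 / (1 + exp(-x))

def layer_forward(weights, biases, inputs):
    """weights[j][i] is the weight from input i to neuron j of this layer."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def network_forward(layers, inputs):
    # Feed the values through each (weights, biases) pair in turn
    values = inputs
    for weights, biases in layers:
        values = layer_forward(weights, biases, values)
    return values

# Hand-picked (not learned) parameters that happen to compute XOR
layers = [
    ([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),  # hidden layer: 2 neurons
    ([[20.0, 20.0]], [-30.0]),                        # output layer: 1 neuron
]

for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, round(network_forward(layers, pair)[0]))
```

Storing each layer as a matrix means the same two functions work for any number of layers and neurons. It also makes it easy to switch to an optimized matrix library later.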

Conclusion

I hope you enjoyed this blog post and that everything was clear. If you have any questions, feel free to reach out to me via the Contact page.